Best Data Pipeline Tools 2023 | Selection Guide

April 2023 | 10 min. read


A data pipeline is a system or process that takes raw data from a source, transforms it into a desired format, and loads it into a destination system such as a database, data warehouse, or analytics platform. These steps are usually automated and run either on a regular schedule (batch processing) or continuously as new data arrives (streaming).
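Here is a minimal Python sketch of the extract, transform, and load steps, using only the standard library. The CSV source, the `orders` table, and the function names are hypothetical stand-ins for a real source system and destination. A data pipeline tool automates these same steps at scale.

```python
import csv
import io
import sqlite3

# Hypothetical raw source: a CSV export with inconsistent formatting.
RAW_CSV = """order_id,customer,amount_usd
1001, Alice ,19.99
1002,bob,5
1003, CAROL,42.50
"""


def extract(raw: str) -> list:
    """Extract: read raw rows from the source (here, an in-memory CSV)."""
    return list(csv.DictReader(io.StringIO(raw)))


def transform(rows: list) -> list:
    """Transform: clean up customer names and convert amounts to integer cents."""
    out = []
    for row in rows:
        name = row["customer"].strip().title()
        cents = round(float(row["amount_usd"]) * 100)
        out.append((int(row["order_id"]), name, cents))
    return out


def load(records: list, conn: sqlite3.Connection) -> None:
    """Load: write the cleaned records into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount_cents INTEGER)"
    )
    # INSERT OR REPLACE lets the pipeline rerun without duplicating rows.
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


def run_pipeline(raw: str, conn: sqlite3.Connection) -> None:
    """Run one extract -> transform -> load cycle."""
    load(transform(extract(raw)), conn)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    run_pipeline(RAW_CSV, conn)
    print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
    # [(1001, 'Alice', 1999), (1002, 'Bob', 500), (1003, 'Carol', 4250)]
```

For batch loads, a scheduler such as cron or a workflow orchestrator would call `run_pipeline` at a fixed interval. A streaming pipeline would instead process records as they arrive.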

A data pipeline tool automates the most time-consuming steps of creating and maintaining data pipelines. Most vendors deliver their solutions as managed services, so customers can avoid maintaining complex servers in-house.

For teams that move high volumes of data, a data pipeline tool typically saves months of time and hundreds of thousands of dollars in engineering labor costs. It also gives data teams advanced capabilities that would otherwise require specialized data science personnel.

Data Pipeline Selection Guide

Data teams tend to look for a pre-built data pipeline tool when they realize that vendor costs are a fraction of the expenses of maintaining pipelines internally.

Setting up ingestion, designing architecture, transforming data, and constantly updating in-house infrastructure diverts personnel away from higher-value work. Modern data pipeline tools solve this problem with low-code, highly automated solutions that cut set-up timelines from weeks to minutes and leave you with virtually zero maintenance effort.

Still, data pipeline tools are a large investment that affects day-to-day processes. Contractual commitments to vendors may leave you with a hefty monthly bill. It is therefore wise to evaluate the exact needs of your data projects and make sure the value clearly outweighs the cost.

As data demands have evolved far beyond simple ETL, so have the tools available to move and extract value from data.

Purchase decisions must now take into account whether solutions support:

- Real-time streams vs. batch processing

- Unstructured vs. structured data

- Internal vs. external sources

- Basic transformations or advanced enrichment capabilities

This article compares general purpose and niche tools to help you find the best-fit solution for your current data goals.

Top Data Pipeline Tools Summary

- Top data pipeline tool for unstructured and semi-structured data: Datastreamer

- Top data pipeline tool for moving internal structured data: Fivetran

- Top open-source data pipeline tool for enterprise data governance: Talend Open Studio

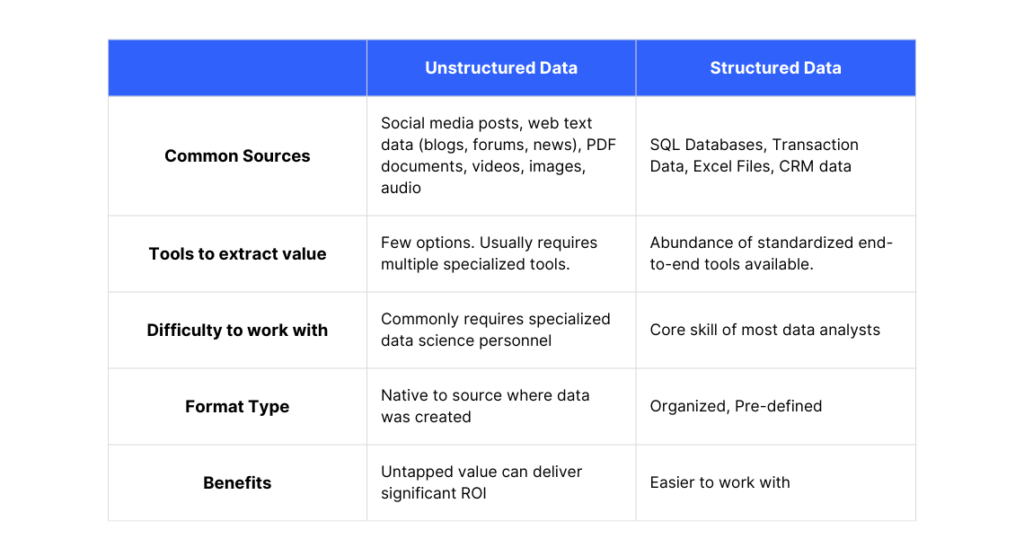

Unstructured Data vs. Structured Data

There is an abundance of tools designed to work easily with structured data. Options for unstructured data, however, have historically been limited and usually required specialized IT personnel to operate.

Recent advancements in AI and NLP have given rise to tools that make it effortless to convert unstructured data into a useful format. With these innovations, data professionals can leverage unstructured and semi-structured data as easily as structured data.

Some tools on this list support unstructured data processing but require heavy customization, which defeats the purpose of a pre-built solution. If you expect to work with unstructured or semi-structured data, choose a solution that specializes in these data types.

💡The Rise of Unstructured Data

Gartner predicts that by 2025, 70% of organizations will shift their focus from big data to wide data, most of which is unstructured. Demand for unstructured text data is growing rapidly to power use cases in generative AI, media monitoring, document management for law firms and banks, and more.

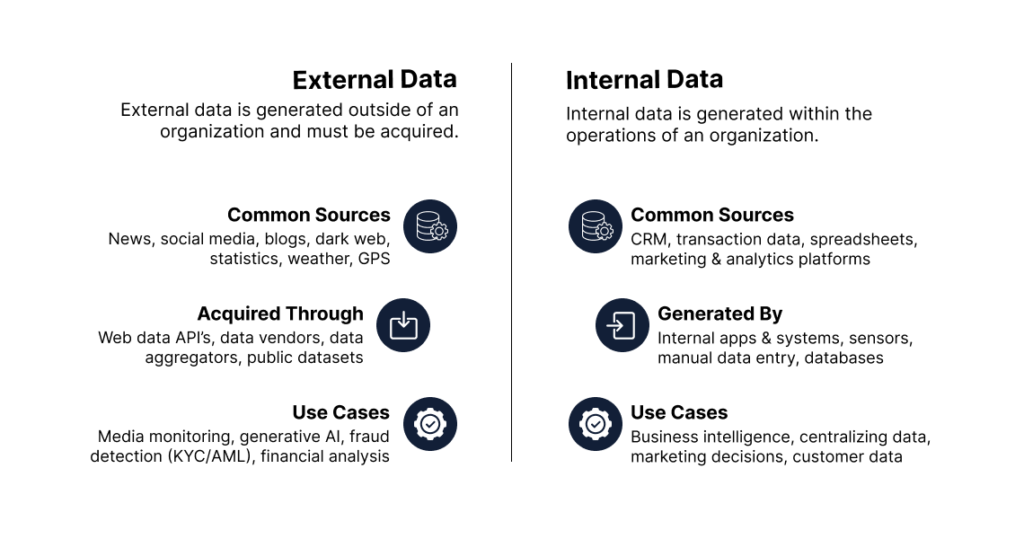

External Data Sources vs. Internal Data Sources

External Data

In a Deloitte survey, 92% of data professionals said their firms needed to increase their use of external data. Most third-party data used by enterprises is procured from data vendors, from data networks, or directly from social media and website APIs. Each data vendor tends to focus on the individual use case it serves.

If your data strategy includes multiple external sources, you may therefore encounter a gap between the data you receive and the business outcome you are trying to achieve.

A data pipeline tool that also supports external data integration lets you automate data procurement, enrichment, and movement. That frees your analysts to concentrate on uncovering actionable intelligence.

Internal Data

Internal data is generated within, and already owned by, an organization. Moving it may require some coordination between departments, but it involves much less procurement hassle than external data.

Data pipeline tools support internal data integration through “pre-built connectors”, which let you set up automated pipelines within a few minutes. The connectors a vendor provides are often a key factor in a purchase decision, so take the time to browse them beforehand. Also consider the scalability and customization potential of the platform under review, and how it fits into your long-term IT roadmap.

Best Data Pipeline Tools Comparison

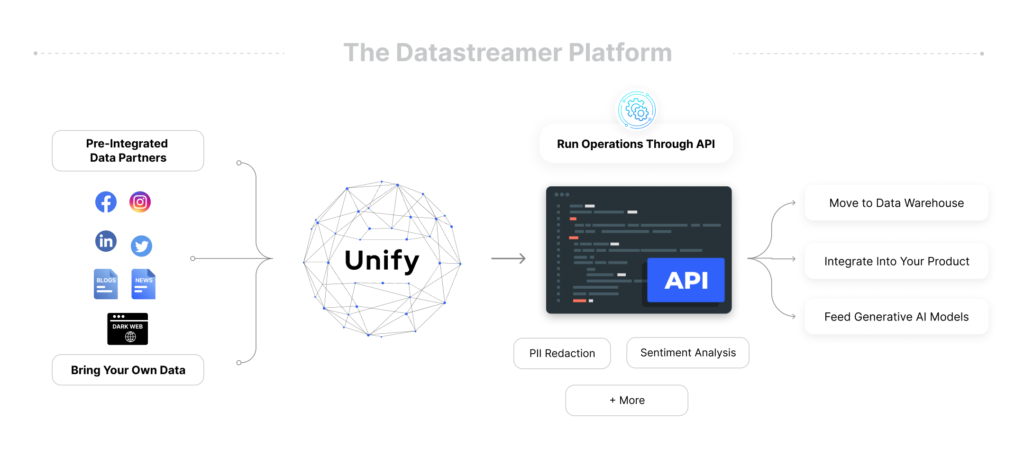

1. Datastreamer

Datastreamer is a platform for building pipelines for unstructured, semi-structured, and external data. Its specialization in real-time streaming of complex data types sets it apart from other major vendors that have limitations in this area. Pre-built components help data teams go live in minutes. Teams can also build sophisticated custom pipelines with up to 95% less work than building in-house.

Best For:

Data teams looking to leverage unstructured and semi-structured data from multiple sources, such as sourcing external data for media monitoring, KYC/AML, or feeding generative AI models.

Key Features:

- Build data streaming pipelines in minutes with a low-code API interface and pre-built components.

- Simplify data procurement with a network of data providers or add your own vendors to manage your data portfolio in one place.

- Transform multiple unstructured data sources into a common schema to aggregate, search, filter, and run powerful operations.

- AI models for enrichment including sentiment analysis, content classification, PII redaction and more.

- Plug into a managed infrastructure that is cost-optimized to handle massive volumes of text data.
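Transforming several unstructured sources into a common schema means mapping each source's shape onto one shared record type. Once that is done, downstream filters and queries work the same way for every source. The sketch below is not Datastreamer's API. The `Document` schema, the news and social field names, and the normalizer functions are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, Dict, Optional


@dataclass(frozen=True)
class Document:
    """Hypothetical common schema that every source is mapped onto."""
    source: str
    author: Optional[str]
    text: str
    published_at: datetime  # timezone-aware, assuming sources include an offset


def from_news(item: dict) -> Document:
    # Hypothetical news-feed shape: headline + body, ISO-8601 timestamp with offset.
    return Document(
        source="news",
        author=item.get("byline"),
        text=f"{item['headline']}\n\n{item['body']}",
        published_at=datetime.fromisoformat(item["published"]),
    )


def from_social(post: dict) -> Document:
    # Hypothetical social-post shape: author handle, Unix epoch seconds.
    return Document(
        source="social",
        author=post["user"],
        text=post["text"],
        published_at=datetime.fromtimestamp(post["created_at"], tz=timezone.utc),
    )


NORMALIZERS: Dict[str, Callable[[dict], Document]] = {
    "news": from_news,
    "social": from_social,
}


def normalize(source: str, record: dict) -> Document:
    """Route a raw record to the normalizer registered for its source."""
    return NORMALIZERS[source](record)


if __name__ == "__main__":
    docs = [
        normalize("news", {"headline": "Outage hits region", "body": "Details...",
                           "published": "2023-04-03T12:00:00+00:00"}),
        normalize("social", {"user": "@analyst", "text": "Another outage today",
                             "created_at": 1680523200}),
    ]
    # A single filter now works across both sources.
    matches = [d for d in docs if "outage" in d.text.lower()]
    print(len(matches))  # 2
```

Adding a new source means writing one more normalizer and registering it. The downstream search, filtering, and enrichment code stays the same.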

Data Sources & Destinations:

- Pre-integrated data partners for external data sources such as: social media, news, blogs, dark web data, and more.

- Connect any data vendor or bring your own internal data through a REST API.

- Integrate previously unstructured and semi-structured data into data warehouses, power analytics products, or feed AI models.

Pricing Overview:

- Datastreamer uses a consumption-based pricing model with volume discounts.

- Billing is determined by the volume of data processed and components that you have deployed.

- A platform fee unlocks access to all features.

Customer Success Highlight:

“We tried doing this ourselves and it really took time away from our team. Datastreamer has made our analyst’s life easier, and really broadened the scope of what we’re able to offer. It’s taken a burden off our team and allowed them to do their job better – figuring out what’s important to clients and giving them that information as quickly as possible.”

Case Study Highlights:

- 3 billion+ pieces of unique content sourced and analyzed monthly to improve the accuracy and speed of media monitoring.

- Datastreamer handles 95% of data indexing requirements so analysts can run precise queries on massive text data from various sources.

2. Fivetran

Fivetran is a trusted name in the data pipeline industry, offering a comprehensive platform that is ideal for transferring internal structured data. The solution is built primarily for ELT workflows but also supports ETL. Some connectors support real-time event streaming through a Change Data Capture (CDC) mechanism. Support for unstructured data is limited and requires heavy customization by the user. As an industry leader, Fivetran commands higher prices but offsets them with broad extensibility for enterprise-scale deployments.
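Change Data Capture replicates a table without re-copying the whole thing. It reads the source's log of row-level inserts, updates, and deletes and replays those changes in order at the destination. The sketch below shows only the replay step. The event tuple format and `apply_changes` are hypothetical and do not reflect Fivetran's internal implementation.

```python
from typing import Any, Dict, List, Optional, Tuple

Row = Dict[str, Any]
# Hypothetical change event: (operation, primary_key, new_row_or_None),
# listed in the order the source database committed the changes.
ChangeEvent = Tuple[str, int, Optional[Row]]


def apply_changes(table: Dict[int, Row], events: List[ChangeEvent]) -> Dict[int, Row]:
    """Replay captured changes onto a destination table keyed by primary key."""
    for op, key, row in events:
        if op in ("insert", "update"):
            if row is None:
                raise ValueError(f"{op} event for key {key} has no row data")
            table[key] = row  # upsert: keep the latest version of the row
        elif op == "delete":
            table.pop(key, None)  # ignore deletes for rows never loaded
        else:
            raise ValueError(f"unknown operation: {op!r}")
    return table


if __name__ == "__main__":
    destination = {1: {"id": 1, "status": "new"}, 2: {"id": 2, "status": "new"}}
    log = [
        ("update", 1, {"id": 1, "status": "shipped"}),
        ("delete", 2, None),
        ("insert", 3, {"id": 3, "status": "new"}),
    ]
    print(apply_changes(destination, log))
    # {1: {'id': 1, 'status': 'shipped'}, 3: {'id': 3, 'status': 'new'}}
```

Only the three changed rows move, however large the source table is. That is why CDC suits frequent, low-latency syncs.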

Best For:

Enterprise-sized organizations looking to move high volumes of data generated within their company, such as from multiple SaaS apps into a centralized data warehouse.

Key Features:

- Pre-built data models for no-code transformations, or custom data models through native integration with dbt (the data transformation tool from dbt Labs).

- Automated data movement, schema drift handling, data normalization, deduplication, data orchestration, and more.

- Fully managed cloud, hybrid, and self-hosted options.

- Enterprise-scale data governance and security features.

Data Sources & Destinations:

- Pre-built connectors for 300+ data sources including cloud databases, data lakes, and popular SaaS applications like Google, Salesforce, Shopify, development tools + more.

- Data destinations include data warehouses and other databases such as BigQuery, Snowflake, Databricks, Panoply, SQL Server + more.

Pricing Overview:

- Fivetran uses a consumption-based pricing model with volume discounts.

- Data volume is measured in Monthly Active Rows (MAR).

- There are several pricing tiers that unlock additional features.

Customer Review Highlight:

“A useful tool for ETL/ELT pipelines, it automatically pushes and loads data from sources to destinations, saving me time. I’ve also used it to perform some SQL data transformations and ingest data into data warehouses like DBT and Snowflake… Price can be high as the data increases immensely”

3. Hevo Data

Hevo Data is a strong Fivetran competitor, and its more flexible pricing makes it a compelling alternative. Like Fivetran, it focuses on internal structured data integration. Unlike Fivetran, Hevo prioritizes ETL, though it supports ELT processes as well. Hevo enables real-time data streaming from internal sources to data warehouses. However, it cannot perform AI enrichments in transit, a capability many analytics use cases depend on. Unstructured data is not supported.

Best For:

Companies looking for a budget-friendly yet well-established pipeline tool to move internal data within their organization. This solution offers monthly plans with greater pricing flexibility, but it does not offer the same level of platform extensibility as Fivetran.

Key Features:

- Visual interface to create pipelines without writing code.

- Automated data movement, schema management, and pre- or post-load transformations.

- High frequency updates (5-minute syncs) even on lower pricing plans.

- Generous free plan with 50+ free connectors to run small data movement efforts.

Data Sources & Destinations:

- Pre-built connectors for 150+ data sources including cloud databases, data lakes, and popular SaaS applications like HubSpot, Facebook Ads, Shopify, development tools + more.

- Data destinations include data warehouses and other databases such as BigQuery, Snowflake, Databricks, SQL Servers + more.

Pricing Overview:

- Hevo Data uses a consumption-based pricing model with volume discounts.

- Data volume is measured by the number of changes to records.

- There is one main paid tier with access to all core features.
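The two pricing meters described for Fivetran and Hevo behave differently when the same rows change often. The sketch below is a simplified illustration built on two assumptions. A distinct-rows meter (as Monthly Active Rows is generally described) counts each primary key once per month. A per-change meter counts every record change. The data and function names are hypothetical, and each vendor's billing documentation defines the exact rules.

```python
from collections import Counter
from typing import List, Tuple

# Hypothetical change log: one (month, primary_key) entry per record change.
Change = Tuple[str, int]


def distinct_rows_per_month(changes: List[Change]) -> Counter:
    """Distinct-row meter: each primary key counts once per month."""
    return Counter(month for month, _key in set(changes))


def changes_per_month(changes: List[Change]) -> Counter:
    """Per-change meter: every individual change counts."""
    return Counter(month for month, _key in changes)


if __name__ == "__main__":
    # Row 1 is updated 30 times in April; row 2 changes once.
    log = [("2023-04", 1)] * 30 + [("2023-04", 2)]
    print(distinct_rows_per_month(log))  # Counter({'2023-04': 2})
    print(changes_per_month(log))        # Counter({'2023-04': 31})
```

Under these assumptions, the cheaper model depends on your update patterns. Rows that are updated many times a month favor a distinct-row meter, while rows that rarely change cost about the same under either.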

Customer Review Highlight:

“Saved us 1000s of dollars compared to other tools. We use Hevo mainly to move Salesforce data to/from Salesforce. Saved engineering time by using Hevo transformations. Other vendors wanted us to pay extra for 15 minute syncs for Salesforce, while Hevo offered us 5-minute syncs by default.”

4. Segment

The data pipelines of Segment’s customer data platform are designed exclusively for internal customer and marketing data, a specialized solution that stands out in this niche. Segment excels at helping companies extract value from internal website, mobile, and payment data within a single platform. It does not support advanced AI enrichment of externally acquired data. Teams looking to mine third-party data for marketing and product insights may therefore have to integrate an additional tool.

Best For:

Marketing and product teams looking for data pipelines purpose-built to activate the value of internal web, mobile, and customer data.

Key Features:

- A single API for engineering teams to unify data from customer touch points across all platforms and channels.

- Feed omni-channel campaigns with real-time data for improved performance metrics.

- Data governance and regulatory compliance features that are critical for managing privacy-sensitive customer data.

Data Sources & Destinations:

- Pre-built connectors for customer data sources including web pixels, mobile app data, cloud servers and more.

- 400+ data destinations including data warehouses, analytics platforms, ads platforms, email marketing platforms, and other SaaS apps.

Pricing Overview:

- Segment offers various pricing tiers and custom pricing for large enterprise use cases.

- Data volume is measured through monthly “visitors” (users whose data is captured and stored).

Customer Review Highlight:

“Segment has been a game changer. It has sped up our third party integrations as we no longer need to rely on the development team to do them. It has improved the accuracy of our data: sending the same data to all tools, something that was previously impossible with 1-to-1 integrations.”

5. Talend Open Studio

Talend is a data management platform whose product line includes data integration. Talend Open Studio is a free, open-source option for creating basic ETL pipelines. Data teams recognize it as a powerful tool that delivers impressive functionality for a free option. On the other hand, it comes with no official support, and maintaining it requires considerable internal effort. The paid versions resolve these limitations and deliver enterprise-grade functionality.

Best For:

For organizations with very basic ETL projects in mind, the zero vendor cost associated with this tool is attractive. Ensure that your IT team has the bandwidth to properly maintain this solution without slowing down other roadmap items.

Cons of Free, Open-Source Data Pipeline Tools:

- Because they have fewer pre-built components and receive infrequent official updates, free tools require significant upfront configuration and regular adjustments.

- Migrating to a paid tool down the road can disrupt operations and create a large workload to manage the transition.

- There are still costs associated with processing data, and the hosting infrastructure must be set up by your team.

Key Features:

- Execute simple ETL and data integration tasks and manage files from a locally installed, open-source environment that you control.

- Built-in components for ETL including string manipulations, automated lookup handling, bulk loads support, and more.

- Talend has a suite of data governance, application integration, and cloud pipeline tools that are well suited for large enterprises.

Data Sources & Destinations:

- Connectors for packaged applications (ERP, CRM), databases, SaaS apps and more.

- Data destinations include data warehouses, data marts, business intelligence dashboards, OLAP systems, and more.

Pricing Overview:

- Free and open-source with the ability to upgrade into paid tiers for enhanced functionality.

Customer Review Highlight:

“A great ETL tool at no cost. Talend Data Integration is used across our organization. We perform Extract, Transform, and Load using Talend. We integrate various systems such as Oracle Database, Clarify, Salesforce… We also migrate a lot of data between the system on a one-time basis.”

About Datastreamer

Datastreamer removes 95% of the work required to transform unstructured data from multiple external sources into a unified, analytics-ready format. Source, combine, and enrich data effortlessly through a simple API interface to save months of development time. Plug existing components from your pipelines into our managed infrastructure to scale with lower overhead costs.

Customers use Datastreamer to feed text data into AI models and power insights for Threat Intelligence, KYC/AML, Consumer Insights, Financial Analysis, and more.