How to Standardize Data from Different Sources: Tools, Steps + Examples

Juan Combariza

December 2023 | 7 min. read


What is data standardization?

Data standardization refers to the process of converting data values into uniform formats. This practice ensures consistency within the attributes of datasets, facilitating effective analysis and management.

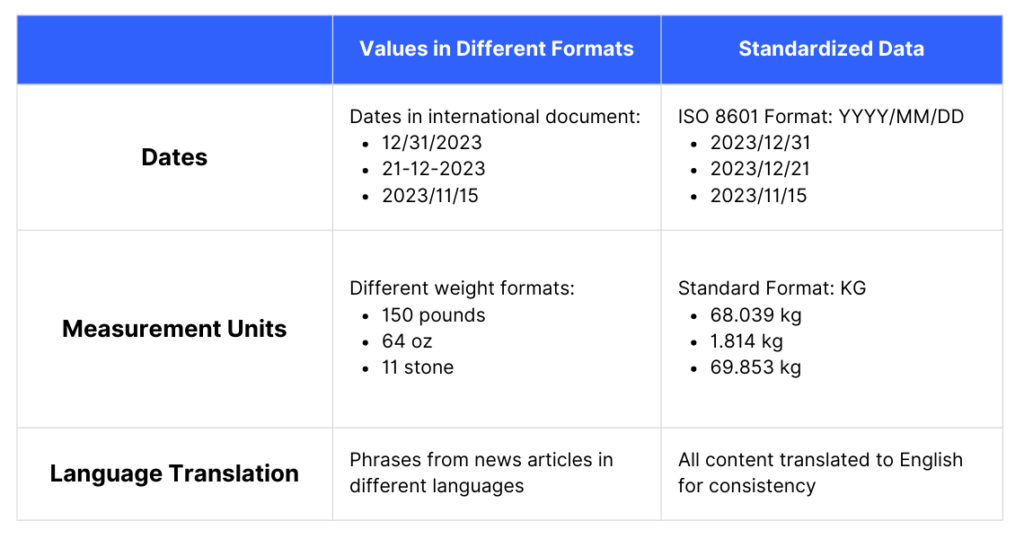

Examples of data standardization include:

- Converting dates from various formats into a single standard format (e.g. YYYY-MM-DD) across all datasets.

- Converting various measurement units to a single standard, such as changing all distances from miles to kilometers.

- In multinational datasets, translating or converting all data to a single language to maintain consistency.
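The first two examples are simple enough to sketch directly. The Python snippet below is a minimal illustration, assuming a small, hypothetical list of date formats seen across sources. It converts each date to ISO 8601 (YYYY-MM-DD) and converts miles to kilometers using the exact definition of the international mile.

```python
from datetime import datetime

# Hypothetical set of input formats observed across sources; extend as needed.
# Order matters: the first format that parses wins, so an ambiguous value such
# as "03/04/2023" is read as month/day here.
DATE_FORMATS = ["%Y-%m-%d", "%m/%d/%Y", "%d.%m.%Y", "%B %d, %Y"]

# The international mile is defined as exactly 1.609344 km.
KM_PER_MILE = 1.609344


def standardize_date(value: str) -> str:
    """Return the date as ISO 8601 (YYYY-MM-DD), or raise ValueError."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue  # try the next known format
    raise ValueError(f"Unrecognized date format: {value!r}")


def miles_to_km(miles: float) -> float:
    """Convert miles to kilometers, rounded to the nearest meter."""
    return round(miles * KM_PER_MILE, 3)


print(standardize_date("12/31/2023"))         # 2023-12-31
print(standardize_date("31.12.2023"))         # 2023-12-31
print(standardize_date("December 31, 2023"))  # 2023-12-31
print(miles_to_km(10))                        # 16.093
```

Raising an error for unrecognized values, instead of guessing, keeps bad inputs visible so they can be reviewed instead of silently entering the standardized dataset.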

Why standardize data from different sources?

The task of data standardization, typically executed by data professionals, ensures data is appropriately prepared for accurate analysis and efficient storage.

Data standardization is one of the tasks within the broader process of data preparation. Data preparation, which also includes data cleaning and data normalization, is so vital in the field of big data that it is often cited as consuming 70% of data professionals' time.

What happens if I don’t standardize data?

- Incompatibility with Machine Learning: When different sources are not standardized, ML models may misinterpret the data, leading to biased or inaccurate predictions.

- Impaired Analytics: Analytics tools rely on standardized data for accurate analysis. Non-standardized data can skew analytics results, leading to incorrect insights.

- Complications in Data Integration: Merging data from diverse sources like social media, web data, and databases requires a unified format. Without this, integrating these datasets can become a complex, error-prone process, resulting in incomplete or misleading datasets.

Standardizing External Unstructured Data

In today’s digital landscape, a significant volume of valuable data comes from external, unstructured sources such as social media, blogs, news websites, and PDF documents.

Unstructured data lacks a predefined format or organization, making it inherently more difficult to standardize than structured data.

Specialized tools for unstructured data can streamline what would otherwise be manual standardization processes.
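The core idea behind such tools, mapping records whose source formats differ onto one common schema, can be sketched in plain Python. The source names, field names, and timestamp conventions below are hypothetical assumptions for illustration, not the behavior of any particular tool.

```python
from datetime import datetime, timezone

# Hypothetical mappings: each source names the same attributes differently.
FIELD_MAP = {
    "source_a": {"text": "body", "author": "user_name", "created": "timestamp"},
    "source_b": {"text": "content", "author": "by", "created": "published_at"},
}


def to_common_schema(source: str, record: dict) -> dict:
    """Map one raw record from `source` onto the shared schema."""
    mapping = FIELD_MAP[source]
    out = {field: record.get(raw_field) for field, raw_field in mapping.items()}

    # Assumed conventions: numeric timestamps are Unix epoch seconds and
    # strings are ISO 8601; both are normalized to UTC.
    created = out["created"]
    if isinstance(created, (int, float)):
        dt = datetime.fromtimestamp(created, tz=timezone.utc)
    else:
        dt = datetime.fromisoformat(created)
        if dt.tzinfo is None:
            dt = dt.replace(tzinfo=timezone.utc)  # assume naive strings are UTC
        dt = dt.astimezone(timezone.utc)
    out["created"] = dt.isoformat()

    # Collapse runs of whitespace in free text.
    out["text"] = " ".join((out["text"] or "").split())
    out["source"] = source
    return out


a = to_common_schema("source_a", {"body": "Great   launch!", "user_name": "ana", "timestamp": 0})
b = to_common_schema("source_b", {"content": "Agreed", "by": "bo", "published_at": "2023-12-01T10:00:00+01:00"})
print(a["created"])  # 1970-01-01T00:00:00+00:00
print(b["created"])  # 2023-12-01T09:00:00+00:00
```

Keeping the per-source differences in a lookup table means a new source usually needs only a new mapping entry, not new code.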

Steps to Standardize Data

Data standardization typically consists of the following steps:

1. Understand the Data Sources

- Identify Data Sources: Start by identifying all the different data sources. These can be databases, CSV files, APIs, or even manual records.

- Assess Data Quality: Evaluate the quality of data from each source. Look for issues like missing values, inconsistent formats, or outliers.
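Part of the quality assessment can be automated. The sketch below profiles each source by counting missing values and summarizing the "shape" of each value (digits become 9, letters become a), so mixed formats such as 2023-01-05 and 01/07/2023 show up as different shapes. The sample rows and field names are hypothetical.

```python
import re
from collections import Counter

# Hypothetical sample rows pulled from two sources.
rows = [
    {"source": "crm", "signup": "2023-01-05", "email": "a@x.com"},
    {"source": "crm", "signup": None, "email": "b@x.com"},
    {"source": "csv", "signup": "01/07/2023", "email": ""},
]


def value_shape(value) -> str:
    """Reduce a value to its shape: digits -> 9, letters -> a."""
    shape = re.sub(r"\d", "9", str(value))
    return re.sub(r"[A-Za-z]", "a", shape)


def profile(rows, fields):
    """Count missing values and distinct value shapes per (source, field)."""
    report = {}
    for row in rows:
        for field in fields:
            entry = report.setdefault((row["source"], field), {"missing": 0, "shapes": Counter()})
            value = row.get(field)
            if value is None or value == "":
                entry["missing"] += 1
            else:
                entry["shapes"][value_shape(value)] += 1
    return report


for key, stats in profile(rows, ["signup", "email"]).items():
    print(key, stats["missing"], dict(stats["shapes"]))
```

More than one shape for the same field, or a high missing count for one source, is a signal to look closer before defining standardization rules.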

2. Define Standardization Rules

- Develop a Data Dictionary: Create a data dictionary that defines standard formats, naming conventions, and acceptable values for key data elements.

- Set Data Type Standards: Standardize data types across sources (e.g., date formats, numeric formats, text encodings).
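A data dictionary is easiest to enforce when it is machine-readable. The following is a minimal sketch, assuming a hypothetical dictionary with four fields, that stores the rules as plain Python data and checks records against them.

```python
import re

# A hypothetical data dictionary: one rule set per standardized field.
DATA_DICTIONARY = {
    "customer_id": {"type": int, "required": True},
    "country_code": {"type": str, "required": True, "pattern": r"[A-Z]{2}"},  # ISO 3166-1 alpha-2
    "signup_date": {"type": str, "required": False, "pattern": r"\d{4}-\d{2}-\d{2}"},
    "status": {"type": str, "required": True, "allowed": {"active", "inactive"}},
}


def validate(record: dict) -> list:
    """Return a list of rule violations for one record (empty if it conforms)."""
    errors = []
    for field, rule in DATA_DICTIONARY.items():
        value = record.get(field)
        if value is None:
            if rule["required"]:
                errors.append(f"{field}: missing")
            continue
        if not isinstance(value, rule["type"]):
            errors.append(f"{field}: expected {rule['type'].__name__}")
            continue
        if "pattern" in rule and not re.fullmatch(rule["pattern"], value):
            errors.append(f"{field}: does not match {rule['pattern']}")
        if "allowed" in rule and value not in rule["allowed"]:
            errors.append(f"{field}: {value!r} is not an allowed value")
    return errors


print(validate({"customer_id": 1, "country_code": "DE", "status": "active"}))  # []
print(validate({"customer_id": "1", "country_code": "Germany", "status": "paused"}))
```

Because the rules are data rather than code, the same dictionary can double as documentation and be reused in the quality assurance step.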

3. Data Cleaning

- Remove Duplicates: Identify and eliminate duplicate records to ensure data uniqueness.

- Handle Missing Data: Decide on a strategy for missing data – whether to impute, delete, or flag these entries.
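Both cleaning tasks can be sketched with the standard library. In this illustration, duplicates are detected on a key compared case- and whitespace-insensitively, and missing numeric values are imputed with the median while a flag column records which rows were filled. The records and the choice of median imputation are assumptions; deleting or only flagging rows are equally valid strategies.

```python
from statistics import median


def deduplicate(records, key_fields):
    """Keep the first record for each key, comparing keys case- and space-insensitively."""
    seen = set()
    unique = []
    for record in records:
        key = tuple(str(record.get(f, "")).strip().lower() for f in key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


def impute_median(records, field):
    """Fill missing values with the median and flag which rows were imputed."""
    present = [r[field] for r in records if r.get(field) is not None]
    fill = median(present)  # raises StatisticsError if no values are present
    result = []
    for record in records:
        record = dict(record)  # copy so the input list is not mutated
        imputed = record.get(field) is None
        record[f"{field}_imputed"] = imputed
        if imputed:
            record[field] = fill
        result.append(record)
    return result


# Hypothetical customer rows: the first two differ only in case and whitespace.
customers = [
    {"email": "A@x.com", "age": 30},
    {"email": "a@x.com ", "age": 30},
    {"email": "b@x.com", "age": None},
    {"email": "c@x.com", "age": 40},
]
cleaned = impute_median(deduplicate(customers, ["email"]), "age")
print(cleaned[1])  # {'email': 'b@x.com', 'age': 35.0, 'age_imputed': True}
```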

4. Data Transformation

- Normalize Data Formats: Convert data into standardized formats. For example, change date formats to a single standard (YYYY-MM-DD), or unify text case (e.g., all lowercase).

- Scale and Normalize Numeric Data: For numerical data, apply scaling or normalization to bring different ranges into a comparable scale, especially important for machine learning models.
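Two common choices for the scaling step are min-max scaling, x' = (x - min) / (max - min), which maps values into [0, 1], and z-score standardization, z = (x - mean) / standard deviation, which centers values on 0. A minimal sketch of both, using hypothetical income values:

```python
from statistics import mean, pstdev


def min_max_scale(values):
    """Rescale values linearly into [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column: no spread to scale
    return [(v - lo) / (hi - lo) for v in values]


def z_score(values):
    """Center on the mean and divide by the population standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


incomes = [20_000, 50_000, 80_000]  # hypothetical values
print(min_max_scale(incomes))  # [0.0, 0.5, 1.0]
print(z_score(incomes))
```

For machine learning, the min/max or mean/standard deviation are usually computed on the training data only and then reused on new data, so that both are scaled the same way.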

5. Integration and Consolidation

- Merge Data from Multiple Sources: Combine data from different sources into a single, integrated dataset. This might involve joining tables, concatenating datasets, etc.

- Resolve Conflicts: In case of conflicting data from different sources, establish rules to resolve these conflicts (e.g., which source takes precedence, or how to average values).
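A "which source takes precedence" rule can be expressed as a priority table. This sketch assumes hypothetical source names and priorities: records sharing a key are merged, a more trusted source overwrites a less trusted one, and a missing value never erases a known one.

```python
# Hypothetical precedence: a lower number means a more trusted source.
SOURCE_PRIORITY = {"crm": 0, "billing": 1, "web_form": 2}


def merge_records(records, key="customer_id"):
    """Merge records that share `key`, letting more trusted sources win conflicts."""
    merged = {}
    # Visit the least trusted sources first so more trusted values overwrite them.
    ordered = sorted(records, key=lambda r: SOURCE_PRIORITY[r["source"]], reverse=True)
    for record in ordered:
        target = merged.setdefault(record[key], {key: record[key]})
        for field, value in record.items():
            if field in (key, "source") or value is None:
                continue  # never let a missing value erase a known one
            target[field] = value
    return merged


records = [
    {"source": "web_form", "customer_id": 7, "email": "old@x.com", "phone": "555-0100"},
    {"source": "crm", "customer_id": 7, "email": "new@x.com", "phone": None},
]
print(merge_records(records))
# {7: {'customer_id': 7, 'email': 'new@x.com', 'phone': '555-0100'}}
```

For numeric fields where no source is clearly more reliable, the overwrite rule could be replaced with an average of the conflicting values.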

6. Quality Assurance

- Validate Standardized Data: Perform checks to ensure that the data standardization has been correctly implemented.

- Audit Trails: Keep records of data transformations and standardization processes for traceability and future audits.
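Validation and audit trails can be combined by routing every transformation through a small wrapper. In the sketch below, which is an illustration rather than a prescribed design, each step logs its name, row counts, and a UTC timestamp, and a final check confirms that the remaining rows meet the date standard. The rows and step name are hypothetical.

```python
import re
from datetime import date, datetime, timezone

audit_log = []  # one entry per transformation step


def apply_step(records, name, func):
    """Run one transformation and append a traceability entry to audit_log."""
    result = func(records)
    audit_log.append({
        "step": name,
        "rows_in": len(records),
        "rows_out": len(result),
        "at": datetime.now(timezone.utc).isoformat(),
    })
    return result


def is_iso_date(value) -> bool:
    """True if value is a real calendar date written as YYYY-MM-DD."""
    if not isinstance(value, str) or not re.fullmatch(r"\d{4}-\d{2}-\d{2}", value):
        return False
    try:
        date.fromisoformat(value)  # rejects impossible dates such as 2023-02-30
    except ValueError:
        return False
    return True


rows = [{"id": 1, "signup": "2023-12-01"}, {"id": 2, "signup": "12/01/2023"}]
rows = apply_step(rows, "drop rows with invalid signup dates",
                  lambda rs: [r for r in rs if is_iso_date(r["signup"])])

# Validation: every remaining row must now satisfy the standard.
assert all(is_iso_date(r["signup"]) for r in rows)
print(audit_log[0]["rows_in"], audit_log[0]["rows_out"])  # 2 1
```

In practice the log would be written to durable storage instead of kept in memory, so it remains available for later audits.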

Tools to Standardize Data

This section covers a range of options, from manual approaches that offer granular control to cloud-based platforms that automate processes for large-scale data handling.

We also explore solutions designed specifically for transforming unstructured data, such as streams of social media and web data or files with inconsistent formats.

Pre-Built Tools for Data Standardization:

ETL Tools (Talend, Informatica, Apache NiFi):

These tools typically offer significant out-of-the-box functionality that reduces the need for extensive manual coding. They come with user-friendly interfaces and pre-designed components that simplify the process of data transformation (including standardization).

- Open Source: Apache NiFi is an open-source ETL tool for data standardization, offering flexibility and community support.

- Unstructured Data: These tools are generally more suited for structured and semi-structured data.

Cloud-Based Pipeline Solutions (Datastreamer, Google Cloud Dataprep, Azure Data Factory):

Expanding on traditional ETL functionality, pipeline solutions also offer built-in connectors and automated data pipelines, which enable users to standardize data with minimal manual intervention.

- Unstructured Data: Pipeline solutions like Datastreamer are built to handle unstructured data, equipped with AI components specific to standardizing unstructured data with inconsistent source formats.

Data Quality Management Software (Informatica Data Quality, SAS Data Management, IBM InfoSphere):

These are comprehensive, pre-built data quality solutions. They offer a range of functionalities like data profiling, cleaning, and validation with less need for manual customization.

- Unstructured Data: These tools primarily focus on structured data. While they offer some capabilities for unstructured data, such as text data, their strength lies in handling structured datasets.

Data Standardization Tools (Requiring More Manual Customization):

SQL and Database Management Systems (MySQL, PostgreSQL, Microsoft SQL Server):

While these systems are powerful for data management, they typically require more manual effort to write and execute SQL queries for data standardization tasks.

- Open Source: MySQL and PostgreSQL are open source, offering the flexibility and community support that comes with open-source software.

- Unstructured Data: Traditional relational database management systems are primarily designed for structured data. Handling unstructured data often requires additional tools or software.
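To give a sense of that manual effort, the sketch below runs typical cleanup queries: trimming and lower-casing emails, and mapping country spellings to a code through a lookup table. It uses SQLite through Python's built-in sqlite3 module so it runs without a database server; the table, columns, and country mapping are hypothetical, and the equivalent statements in MySQL, PostgreSQL, or SQL Server may differ in details.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, email TEXT, country TEXT)")
conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", [
    (1, "  Ana@Example.COM ", "usa"),
    (2, "bo@example.com", "United States"),
    (3, "CY@example.com", "US"),
])

# Trim surrounding spaces and lower-case every email in place.
conn.execute("UPDATE customers SET email = LOWER(TRIM(email))")

# Hypothetical lookup table mapping raw spellings to ISO 3166-1 alpha-2 codes.
conn.execute("CREATE TABLE country_map (raw TEXT PRIMARY KEY, iso2 TEXT)")
conn.executemany("INSERT INTO country_map VALUES (?, ?)",
                 [("usa", "US"), ("united states", "US"), ("us", "US")])

# Replace only the countries that have a known mapping; others are left untouched.
conn.execute("""
    UPDATE customers
    SET country = (SELECT iso2 FROM country_map
                   WHERE raw = LOWER(TRIM(customers.country)))
    WHERE LOWER(TRIM(country)) IN (SELECT raw FROM country_map)
""")
conn.commit()

print(conn.execute("SELECT * FROM customers ORDER BY id").fetchall())
# [(1, 'ana@example.com', 'US'), (2, 'bo@example.com', 'US'), (3, 'cy@example.com', 'US')]
```

Keeping the mapping in a table instead of hard-coding it in a CASE expression makes it easier to extend as new spellings appear.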

Programming Languages – Python and R:

Both Python and R are programming languages that offer extensive libraries for data manipulation. However, they require a good amount of manual coding and customization to perform data standardization tasks.

- Open Source: Python and R are both open source, making them highly popular in the data science community due to their flexibility and extensive community-driven libraries and support.

- Unstructured Data: Both Python and R can handle unstructured data thanks to their extensive libraries (such as NLTK and TensorFlow in Python, and tm and text2vec in R).

In-Depth Example of Data Standardization

Here is a detailed case study of a global financial services firm that demonstrates the practical application and significance of data standardization.

A Global Financial Services Firm – Numeric and Financial Data Standardization

Data Sources to Standardize:

- Stock Market Feeds: Real-time data from multiple stock exchanges around the world, each with its own set of reporting standards and currency.

- Banking Records: Transaction records from banking operations in different countries, each with unique formats and currency representations.

- Regulatory Filings: Financial data from regulatory filings which vary significantly from one jurisdiction to another.

- Market Research Data: Economic and financial market research data from various global sources, often in different formats and languages.

- Internal Financial Records: The firm’s own financial records, including budgets, forecasts, and internal financial analyses.

Standardization Challenge:

- Currency Conversion: The firm must standardize financial data involving multiple currencies into a single base currency for consistent financial reporting and analysis.

- Consistent Reporting Standards: Aligning financial reports from various global operations to a single standard, such as International Financial Reporting Standards (IFRS).

- Interest Rate and Financial Ratios Standardization: Harmonizing various financial metrics like interest rates, return on investment (ROI), and debt-to-equity ratios across different markets for uniformity.
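The first and third challenges reduce to arithmetic that must be applied consistently. The sketch below is a simplified illustration: it converts amounts to a single base currency using hypothetical fixed exchange rates, and expresses interest rates quoted as percentages or basis points as decimal fractions. It uses Python's decimal module to avoid binary floating-point rounding in monetary values.

```python
from decimal import Decimal, ROUND_HALF_UP

# Hypothetical fixed rates: USD received per 1 unit of each currency.
# A real pipeline would use dated rates from a market-data source.
RATES_TO_USD = {"USD": Decimal("1"), "EUR": Decimal("1.10"), "JPY": Decimal("0.0070")}

# Divisors that turn each quoting convention into a decimal fraction.
RATE_UNITS = {"decimal": Decimal("1"), "percent": Decimal("100"), "bps": Decimal("10000")}


def to_base_currency(amount: str, currency: str) -> Decimal:
    """Convert an amount to USD, rounded to cents."""
    usd = Decimal(amount) * RATES_TO_USD[currency]
    return usd.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


def rate_to_decimal(value: str, unit: str) -> Decimal:
    """Express an interest rate as a fraction, e.g. 5.25% -> 0.0525."""
    return Decimal(value) / RATE_UNITS[unit]


print(to_base_currency("100", "EUR"))      # 110.00
print(to_base_currency("10000", "JPY"))    # 70.00
print(rate_to_decimal("5.25", "percent"))  # 0.0525
print(rate_to_decimal("525", "bps"))       # 0.0525
```

Which rate applies (transaction date, period-end, or period average) is itself a reporting-standard decision, so it belongs in the standardization rules rather than being left to each analyst.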

Impact of Standardization:

- Enhanced accuracy and consistency in financial analysis and risk assessment.

- Streamlined processes for reporting, ensuring compliance with international regulatory standards.

- Improved efficiency in consolidating and comparing financial data from diverse international sources.

Data Standardization FAQs

What is the difference between data standardization and data normalization?

Data standardization and data normalization are distinct yet complementary processes in data management. Data standardization involves transforming data from various sources into a common format, such as converting dates from different formats to a standardized one.

On the other hand, data normalization in a database context refers to organizing data to reduce redundancy and dependency, such as splitting a large table with customer and order details into two separate tables for customers and orders, linked by a customer ID.

While standardization ensures consistency across datasets, normalization optimizes the database structure for efficient data storage and retrieval.
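To make the normalization side of the contrast concrete, here is the customer/order split described above, sketched in Python with in-memory structures standing in for database tables. The column names and rows are hypothetical; in a real database the result would be two tables linked by a customer ID foreign key.

```python
# A denormalized table: customer details repeat on every order row (hypothetical data).
flat_rows = [
    {"order_id": 101, "customer_id": 1, "name": "Ana", "city": "Lima", "total": 40.0},
    {"order_id": 102, "customer_id": 1, "name": "Ana", "city": "Lima", "total": 15.5},
    {"order_id": 103, "customer_id": 2, "name": "Bo", "city": "Oslo", "total": 99.0},
]


def split_customers_orders(rows):
    """Split flat rows into a customers table and an orders table keyed by customer_id."""
    customers = {}
    orders = []
    for row in rows:
        # Customer attributes are stored once per customer_id (last row wins if they differ).
        customers[row["customer_id"]] = {"name": row["name"], "city": row["city"]}
        # Orders keep only their own attributes plus the customer_id link.
        orders.append({"order_id": row["order_id"], "customer_id": row["customer_id"], "total": row["total"]})
    return customers, orders


customers, orders = split_customers_orders(flat_rows)
print(customers)  # {1: {'name': 'Ana', 'city': 'Lima'}, 2: {'name': 'Bo', 'city': 'Oslo'}}
# Joining back through customer_id recovers the original information.
print([customers[o["customer_id"]]["name"] for o in orders])  # ['Ana', 'Ana', 'Bo']
```

Note that the values themselves are unchanged here: normalization restructures where data lives, while standardization changes how the values are written.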